Breaking: Micro LLMs Are Revolutionizing Device Intelligence in 2025

Discover how Micro LLMs are transforming smartphones, wearables, and IoT devices in 2025 with on-device AI that's faster, more private, and energy-efficient. The future is happening now.

The Shift to Intelligence at the Edge

Have you ever wondered why your smartphone has to send data to the cloud for AI tasks, even for simple requests? Think of the frustrating delay when you ask your virtual assistant a question, or the privacy concerns that come with having your conversations processed on remote servers. In 2025, these pain points are starting to fade thanks to a technological shift that's quietly transforming our devices: Micro LLMs.

As 2025 unfolds, the integration of artificial intelligence into everyday devices is undergoing a fundamental shift. No longer confined to cloud servers, AI is moving to the edge: directly onto our smartphones, wearables, and IoT devices. This transformation, powered by Micro LLMs, is one of the most significant tech trends of the year, with far-reaching implications for how we interact with technology.

What Are Micro LLMs and Why Should You Care?

The Power of AI, Miniaturized

Micro LLMs are not simply scaled-down versions of their larger counterparts. They represent a fundamental rethinking of how AI can operate within the constraints of mobile and IoT devices. Unlike traditional Large Language Models that require powerful cloud infrastructure, Micro LLMs are purpose-built for efficiency.

"Micro LLMs are compact, lightweight versions of large language models, designed to run efficiently on edge devices and embedded systems," according to an article published on LinkedIn in May 2025. "Unlike traditional LLMs that depend on powerful cloud servers, Micro LLMs offer real-time AI performance with minimal resources."

Source: LinkedIn

These specialized models are optimized for:

- Speed: No network round trip, so responses can arrive in milliseconds rather than seconds

- Energy efficiency: Designed to fit the tight power budgets of battery-powered devices

- Privacy: Data stays on your device

- Reliability: Works without internet connectivity

- Cost-effectiveness: No per-request cloud inference fees

The result is a new generation of devices that can think, learn, and adapt without constantly phoning home to data centers, a genuine shift in how we experience AI in our daily lives.

The Technical Breakthrough

The development of Micro LLMs has been made possible through several technical innovations:

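- Model compression techniques, such as pruning, that shrink neural networks while preserving most of their accuracy

- Quantization methods that represent model weights with fewer bits, for example 8-bit or 4-bit integers instead of 16- or 32-bit floating-point numbers

- Knowledge distillation, in which a smaller "student" model is trained to reproduce the outputs of a larger "teacher" model

- Hardware-specific optimizations for mobile and IoT processors

- Sparse computation, such as mixture-of-experts routing, that activates only the parts of the model relevant to a given input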
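To make three of these techniques more concrete, here is a minimal pure-Python sketch. It assumes symmetric per-tensor int8 quantization, the temperature-softened KL-divergence loss commonly used for distillation, and simple top-k gating of the kind used in mixture-of-experts layers. All function names are hypothetical and chosen for illustration; real frameworks implement these ideas on tensors, with many more refinements.

```python
import math

# --- Quantization: store weights as 8-bit integers plus one float scale ---

def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]

# --- Knowledge distillation: the student matches the teacher's softened outputs ---

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# --- Sparse computation: route each input to only the top-k "experts" ---

def top_k_gate(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([gate_scores[i] for i in top])  # renormalize over chosen experts
    return list(zip(top, weights))

weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
print(q, dequantize_int8(q, scale))
print(distillation_loss([1.0, 2.0, 0.5], [1.2, 2.5, 0.1]))
print(top_k_gate([0.1, 2.0, -1.0, 1.5], k=2))
```

Each int8 weight occupies one byte instead of the four a 32-bit float needs, at the cost of a rounding error of at most half the scale per weight. In the gating example, only two of the four hypothetical experts would actually run for that input.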

According to The Inference Bottleneck report from April 2025, "Edge AI introduces a unique set of constraints: limited computational resources, strict power budgets, and real-time latency requirements." Engineers have had to rethink model design and deployment to meet these challenges.

Source: HPC Wire
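The memory side of that constraint comes down to simple arithmetic: weight storage is roughly the parameter count times the bits per weight, divided by eight. The sketch below assumes a hypothetical 1-billion-parameter model and counts weights only, ignoring activations, caches, and runtime overhead, which all add to the real footprint.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate storage for model weights alone, in decimal gigabytes."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9

# Hypothetical 1-billion-parameter model at common precisions.
for label, bits in [("float32", 32), ("float16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label:>8}: {weight_memory_gb(1_000_000_000, bits):.2f} GB")
# float32 -> 4.00 GB, float16 -> 2.00 GB, int8 -> 1.00 GB, int4 -> 0.50 GB
```

Dropping from 32-bit floats to 4-bit integers cuts the weight footprint eightfold, which is often the difference between a model that fits in a phone's memory alongside other apps and one that does not.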

The Ecosystem of Edge Intelligence

The rise of Micro LLMs is creating an entire ecosystem of intelligent devices that work together seamlessly. From smartphones to wearables to home appliances, intelligence is being distributed across our environment in ways that feel natural and intuitive.

According to a 2025 Edge AI Technology Report by Ceva, "Edge AI enables IoT devices to process information right at the source, optimizing routes, minimizing losses, and countering disruptions as they occur." This local processing creates a more responsive and reliable experience across all our devices.

Source: Ceva

The global AI agent market, which includes edge-based solutions, "is projected to grow from USD 5.1 billion in 2024 to USD 47.1 billion by 2030," according to research published by Alvarez & Marsal in May 2025. That projected, roughly ninefold increase underscores the transformative potential of this technology.

Real-World Applications Transforming Daily Life

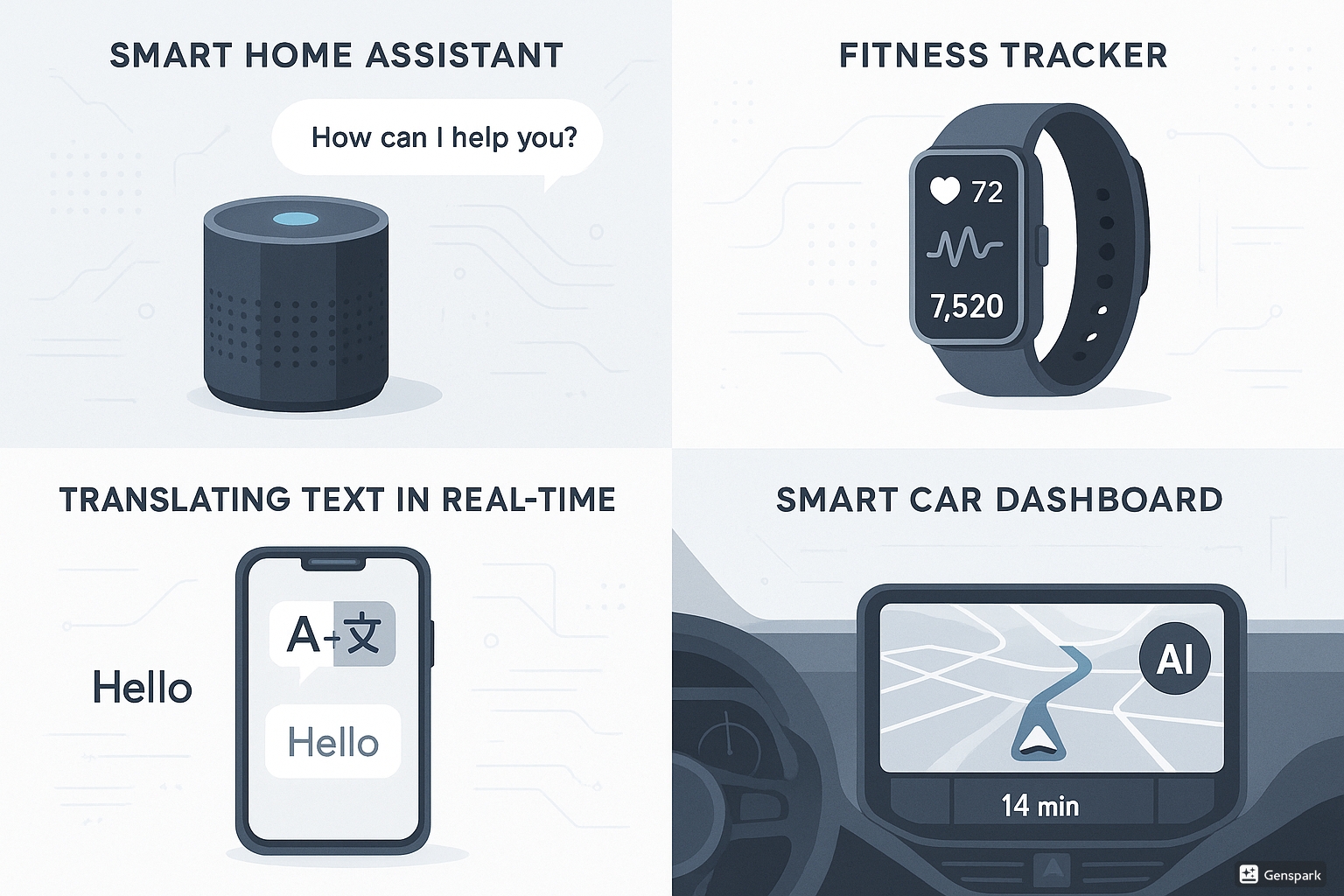

Personal Assistants That Actually Feel Personal

Unlike their cloud-dependent predecessors, assistants built on Micro LLMs can:

- Respond instantly to queries without internet lag

- Access personal data without sending it to remote servers

- Keep working in areas with poor or no connectivity

- Provide truly personalized responses based on your device usage patterns

- Operate with enhanced privacy protections

Health and Wellness Revolution

In the healthcare space, wearable devices powered by Micro LLMs are bringing new capabilities:

- Real-time health monitoring with instant anomaly detection

- Personalized fitness recommendations that adapt to your performance

- Mental health support through on-device sentiment analysis

- Sleep pattern analysis with actionable improvement suggestions

- Integration with medical records for holistic health insights

Smart Homes That Actually Learn

Home automation is evolving beyond simple routines to truly intelligent environments:

- Energy optimization systems that learn household patterns

- Security systems that can distinguish between normal and suspicious activity

- Entertainment systems that predict preferences across family members

- Climate control that adapts to individual comfort preferences

- Appliances that optimize performance based on usage patterns

Automotive Intelligence

Modern vehicles are becoming rolling computers, with Micro LLMs enabling:

- Advanced driver assistance with real-time road condition assessment

- Natural language interfaces for vehicle controls

- Predictive maintenance alerts based on driving patterns

- Personalized comfort settings that adapt to each driver

- Enhanced navigation with contextual understanding of destinations

Privacy and Security Implications

One of the most significant advantages of Micro LLMs is the privacy enhancement they provide. As a recent analysis published on Medium noted, these models are "privacy-focused" by design, allowing "applications to run on smartphones, laptops, and IoT devices" without transmitting sensitive data.

By processing data locally, Micro LLMs:

- Reduce exposure to data breaches

- Reduce, or in many cases eliminate, the need to store sensitive information in the cloud

- Make it easier to comply with privacy regulations such as the GDPR

- Give users greater control over their information

- Leave attackers fewer points of entry

Computer Weekly's May 2025 coverage highlights the business model that edge AI challenges: "Most LLMs operate on a pay-as-you-go, cloud-based model, and users are charged per token (a number of characters) sent or received." On-device models disrupt this paradigm by removing per-token fees for inference that runs locally, although the device's own hardware and battery now bear the compute cost.

Source: Computer Weekly
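A back-of-the-envelope calculation shows why per-token pricing adds up at scale. The sketch below uses purely hypothetical figures (50 requests a day, 500 tokens per request, and a single blended rate of $1.00 per million tokens); real providers publish their own rates and often price input and output tokens differently.

```python
def monthly_token_cost(requests_per_day, tokens_per_request, usd_per_million_tokens, days=30):
    """Estimate one month of per-token cloud charges for a single device."""
    tokens = requests_per_day * tokens_per_request * days
    return tokens / 1_000_000 * usd_per_million_tokens

# Hypothetical figures, not real prices.
per_device = monthly_token_cost(50, 500, 1.00)   # 750,000 tokens per month
fleet = per_device * 1_000_000                   # a hypothetical million devices
print(f"${per_device:.2f} per device, ${fleet:,.0f} per month across the fleet")
```

Under these assumptions a single device costs only $0.75 a month, but a million of them cost $750,000 a month. Moving that inference onto the device removes the recurring fee, not the compute itself.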

The Future of Edge Intelligence: What's Next?

As we look toward the horizon, several trends are emerging that will further advance the capabilities of edge AI:

Collaborative Intelligence

Future systems will seamlessly blend edge and cloud processing, with devices making intelligent decisions about what to process locally versus remotely. This hybrid approach will combine the privacy and speed of edge computing with the depth and breadth of cloud-based models.
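One way to picture that decision is as a small routing policy running on the device. The sketch below is hypothetical throughout: the `Request` fields, the 0.7 difficulty threshold, and the idea that difficulty arrives as a single score are all assumptions for illustration, and how such a score would be estimated (a small classifier, simple heuristics) is left open.

```python
from dataclasses import dataclass

@dataclass
class Request:
    text: str
    contains_personal_data: bool
    estimated_difficulty: float  # hypothetical score: 0.0 (trivial) to 1.0 (very hard)

def choose_backend(req: Request, online: bool, difficulty_threshold: float = 0.7) -> str:
    """Decide whether a request runs on the device or is sent to a cloud model."""
    if not online:
        return "local"  # no connectivity: the on-device model is the only option
    if req.contains_personal_data:
        return "local"  # keep sensitive data on the device, even for hard tasks
    if req.estimated_difficulty > difficulty_threshold:
        return "cloud"  # hard, non-sensitive task: use the larger remote model
    return "local"      # default to the faster, cheaper local path

print(choose_backend(Request("Set a timer for 10 minutes", False, 0.1), online=True))
print(choose_backend(Request("Summarize this 40-page report", False, 0.9), online=True))
print(choose_backend(Request("Summarize my medical notes", True, 0.9), online=True))
```

The privacy check deliberately comes before the difficulty check, so this policy trades answer quality for privacy whenever the two conflict; a different product might make the opposite choice or ask the user.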

Multi-Agent Systems

According to Analytics Vidhya's May 2025 report on AI agent trends, we'll see "More Advanced Multi-Agent Systems," in which multiple specialized agents, potentially including on-device Micro LLMs, work together to solve complex problems. Such systems could coordinate across devices to provide more comprehensive capabilities than any single agent could achieve.

Source: Analytics Vidhya

Continuous Learning

Unlike traditional AI models that remain static after deployment, future edge models may keep learning from user interactions, adapting to changing preferences and requirements without waiting for explicit updates from developers.
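The simplest form of this kind of on-device adaptation does not touch the language model at all: it keeps a small running estimate of user preferences and updates it after every interaction. The sketch below assumes an exponential moving average over accept/reject feedback; the `PreferenceTracker` class and its parameters are hypothetical, and genuine continuous learning for LLMs, which updates model weights on the device, is considerably more involved.

```python
class PreferenceTracker:
    """On-device running estimate of how often each suggestion type is accepted."""

    def __init__(self, learning_rate=0.2, prior=0.5):
        self.learning_rate = learning_rate  # how quickly new feedback outweighs old
        self.prior = prior                  # starting estimate for unseen categories
        self.scores = {}

    def update(self, category, accepted):
        """Move the category's score toward 1.0 (accepted) or 0.0 (rejected)."""
        target = 1.0 if accepted else 0.0
        old = self.scores.get(category, self.prior)
        self.scores[category] = old + self.learning_rate * (target - old)

    def ranked(self):
        """Categories ordered from most to least preferred."""
        return sorted(self.scores, key=self.scores.get, reverse=True)

tracker = PreferenceTracker()
for category, accepted in [("music", True), ("news", False), ("music", True), ("podcasts", True)]:
    tracker.update(category, accepted)
print(tracker.ranked())  # ['music', 'podcasts', 'news']
```

Because each update only nudges the stored score, recent behavior gradually outweighs old behavior without any data leaving the device or any retraining pass.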

Specialized Hardware Acceleration

New processors designed specifically for edge AI, such as the neural processing units (NPUs) now built into many smartphone chips, are enabling more capable models in smaller, lower-power packages. These chips are optimized for the matrix-heavy computational patterns of neural networks while minimizing energy consumption.

Conclusion: The Democratization of AI

The rise of Micro LLMs represents more than a technical advancement: it's a democratization of artificial intelligence. By bringing powerful AI capabilities directly to our devices, this technology makes sophisticated intelligence accessible in contexts and environments where it was previously impractical.

As a May 2025 LinkedIn article put it, Micro LLMs are the "AI-Democrat for all devices," breaking down barriers to advanced AI capabilities and putting them literally in the palms of our hands.

Source: LinkedIn

The combination of speed, privacy, efficiency, and accessibility makes this trend not just technically impressive but genuinely transformative for how we interact with technology. As 2025 progresses, expect to see an explosion of new applications and capabilities as developers explore the possibilities of truly intelligent edge devices.

Whether you're a tech enthusiast, a privacy advocate, or simply someone who appreciates technology that works seamlessly in your life, the Micro LLM revolution is worth watching closely. The future of AI isn't just in the cloud; it's in your pocket, on your wrist, and throughout your home.